App Store A/B Testing Timeline: from Research to Follow-Up Experiments

Liza Knotko


Intelligent ASO can increase conversion without extra traffic expenses, and app A/B testing is its core ingredient. Once you’ve decided to optimize your product page, there’s no point applying random changes in the app stores and expecting phenomenal results straight away.

The truth is, you never know what will work and what will deteriorate your app’s performance. That’s why app A/B testing is a must when it comes to ASO.

Basically, A/B testing is a method of checking hypotheses. You split traffic equally among two or more variants of an app store page element (icon, screenshots, etc.). Because visitors are assigned at random, each group represents the whole audience and behaves like an average user would, so you can identify the variation that performs best.
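To make the equal split concrete, here is a minimal sketch in Python, assuming each visitor has a stable identifier; `assign_variant`, the `user-{i}` IDs, and the experiment name are hypothetical. Hashing the ID spreads visitors evenly across variants and keeps a returning visitor on the same one. This is one common bucketing technique, not necessarily how any particular testing platform implements it.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one of the variants with equal probability."""
    # Including the experiment name in the hash input reshuffles visitors per experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Usage: simulate 10,000 visitors split between two hypothetical icon variants.
variants = ["icon_A", "icon_B"]
counts = Counter(
    assign_variant(f"user-{i}", "icon-test", variants) for i in range(10_000)
)
print(counts)  # roughly 5,000 per variant
```

Because the experiment name is part of the hash input, the same visitor can land in different groups in different experiments, which keeps tests independent of one another.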

Download a FREE app A/B testing workflow template

Split-testing doesn’t only improve your app’s conversion rate; it also helps validate audience segments, traffic channels, and product positioning. Pre-launch experiments can also help evaluate a product and qualify ideas.

When you’re new to A/B testing, it might be hard to know where to begin. Indeed, A/B testing is not as straightforward as it may seem at first. However, certain steps are integral parts of any A/B testing activity.

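Let’s review each step of a classic A/B testing workflow. Any A/B testing timeline should include the following action points: research, hypothesis formulation, variation design, running the experiment, results analysis, implementing the results, and follow-up experiments.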

Duration: 2 days – a week

Let’s make it clear: leading companies don’t A/B test random changes these days. Instead, they conduct research and build strong hypotheses based on the collected data. And, as we know, a solid hypothesis is the cornerstone of any successful A/B test.

The core research activities below, outlined by Gabe Kwakyi from Incipia, can help you embark on this highly important phase.

Focus on the keywords that have the highest search volume and for which your app ranks best, as these keywords make the largest contribution to your organic downloads.
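A rough way to apply this rule to a keyword export is sketched below. The `Keyword` fields, the top-10 cutoff (`max_rank`), and the numbers in the usage example are illustrative assumptions, not output from a real ASO tool.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    term: str
    search_volume: int  # volume/popularity estimate from your ASO tool (assumed available)
    rank: int           # your app's position in search results for this term (1 = top)


def prioritize(keywords: list[Keyword], max_rank: int = 10) -> list[Keyword]:
    """Keep keywords the app already ranks well for, highest search volume first."""
    ranked_well = [k for k in keywords if k.rank <= max_rank]
    # Sort by volume descending; ties are broken by the better (lower) rank.
    return sorted(ranked_well, key=lambda k: (-k.search_volume, k.rank))


# Made-up numbers for illustration only.
keywords = [
    Keyword("photo editor", 80, 12),
    Keyword("art filters", 55, 3),
    Keyword("photo effects", 55, 2),
    Keyword("painting app", 30, 5),
]
print([k.term for k in prioritize(keywords)])
# ['photo effects', 'art filters', 'painting app']
```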

Think about which claims you can make that your competitors cannot. Analyze the advantages your rivals possess and how you can overcome them to convince users to download your app.

Focus on the features users mark as most useful, and inspect the most recent reviews for the most relevant insights.
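Review mining can start as simply as counting how often recent reviews mention each feature. The sketch below assumes reviews are available as `(date, text)` pairs and uses a plain case-insensitive substring match and a hypothetical 90-day window; in practice you would likely want smarter matching (synonyms, typos, multiple languages).

```python
from collections import Counter
from datetime import date, timedelta


def feature_mentions(reviews, features, today, days=90):
    """Count recent reviews that mention each feature (case-insensitive substring match).

    `reviews` is an iterable of (review_date, text) pairs; reviews older than
    `days` days before `today` are ignored.
    """
    cutoff = today - timedelta(days=days)
    counts = Counter()
    for review_date, text in reviews:
        if review_date < cutoff:
            continue  # skip stale reviews
        lowered = text.lower()
        for feature in features:
            if feature.lower() in lowered:
                counts[feature] += 1  # each review counts at most once per feature
    return counts


# Made-up reviews for illustration.
reviews = [
    (date(2024, 5, 2), "Love the art filters, and export is fast"),
    (date(2024, 4, 20), "Filters are great but export crashes"),
    (date(2023, 1, 10), "Export used to be slow"),
]
counts = feature_mentions(reviews, ["filters", "export"], today=date(2024, 5, 10))
print(counts.most_common())
```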

Duration: a couple of hours

Your A/B testing variations should always reflect a quality hypothesis. Form your hypothesis and create variants after researching how your app can be best positioned relative to your competition.

The ASO guide helps you set your goals and choose the type of experiment you want to launch.

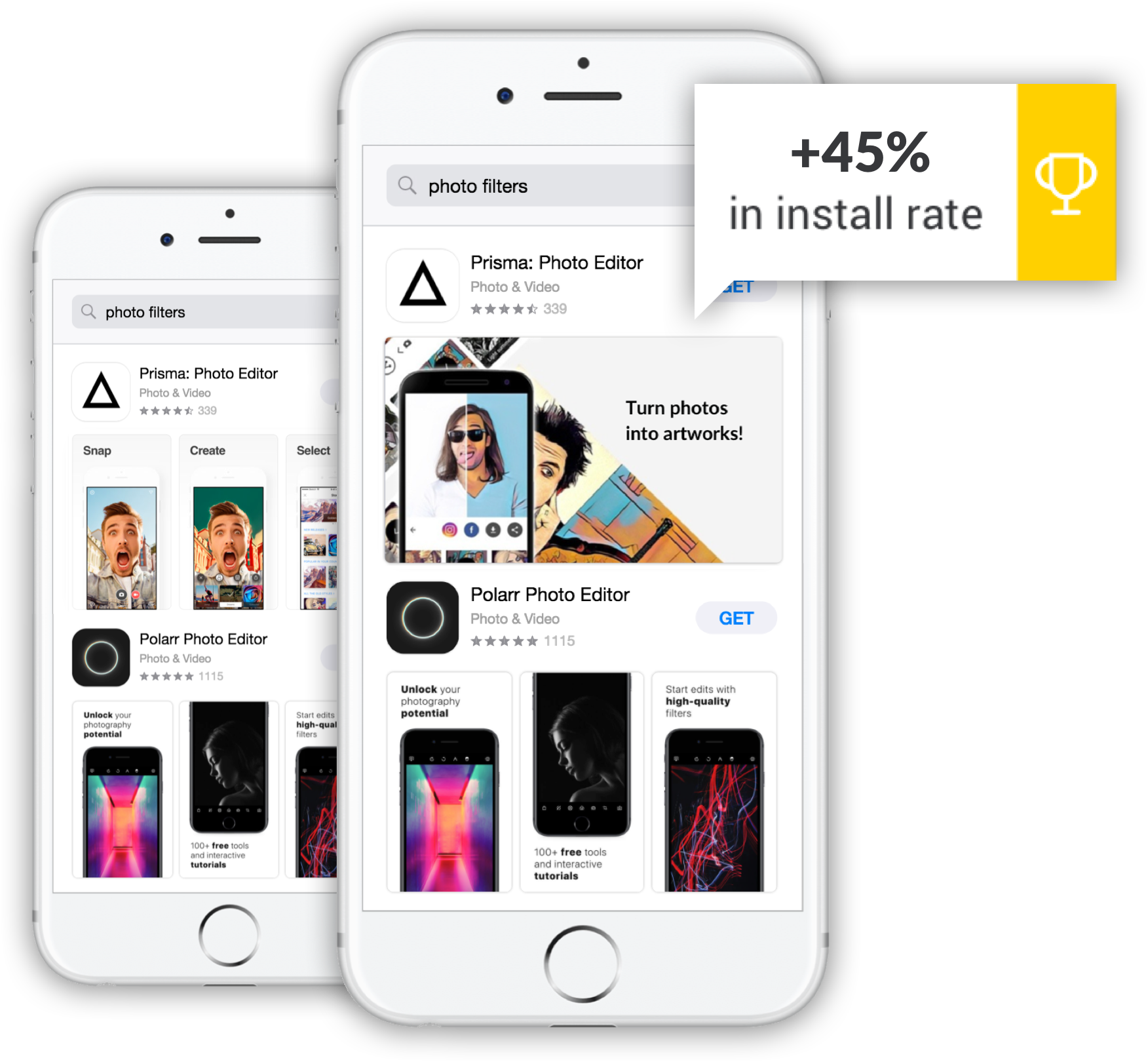

Keep in mind that a hypothesis is not a question; it’s a statement that proposes a solution, its expected effect, and the rationale behind it. For example, Prisma decided to test the following assumption based on best practices in its app category:

Using a bolder font in captions placed on the top of screenshots triggers conversion growth due to better readability.
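One way to keep hypotheses in this statement form is to record the three parts separately and compose the sentence from them. The `Hypothesis` class below is a hypothetical helper, shown here rebuilding the Prisma example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    change: str           # the proposed solution being tested
    expected_effect: str  # the outcome you predict
    rationale: str        # why you expect that outcome

    def statement(self) -> str:
        """Compose the hypothesis as a single declarative sentence."""
        return f"{self.change} {self.expected_effect} due to {self.rationale}."


prisma = Hypothesis(
    change="Using a bolder font in captions placed on the top of screenshots",
    expected_effect="triggers conversion growth",
    rationale="better readability",
)
print(prisma.statement())
```

Keeping the parts separate makes it easy to check that every hypothesis names a concrete change, a measurable effect, and a reason before any design work starts.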

At this step, you should also think through the layout of your variations based on the hypothesis you plan to test and prepare a technical design specification.

Duration: 1 day – a few weeks

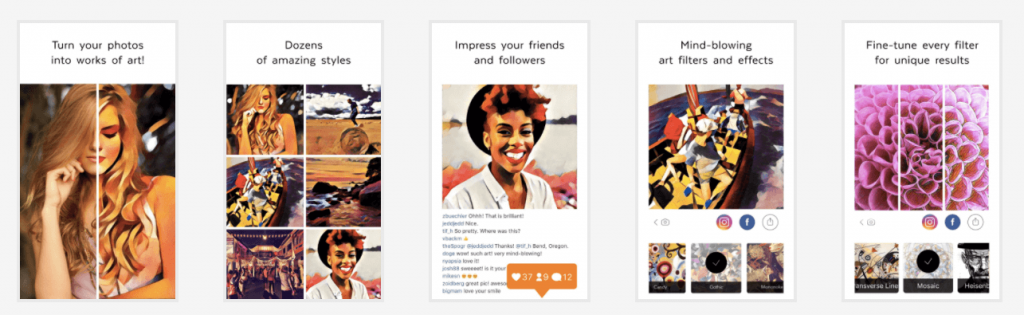

This is the phase where designers create layouts for the upcoming A/B test. The designs should reflect the assumptions under test and follow the technical design specification.

The duration of this phase depends on your in-house designers’ workload. Companies without a staff designer have to resort to third-party solutions, which naturally take more time.

Duration: 7-14 days

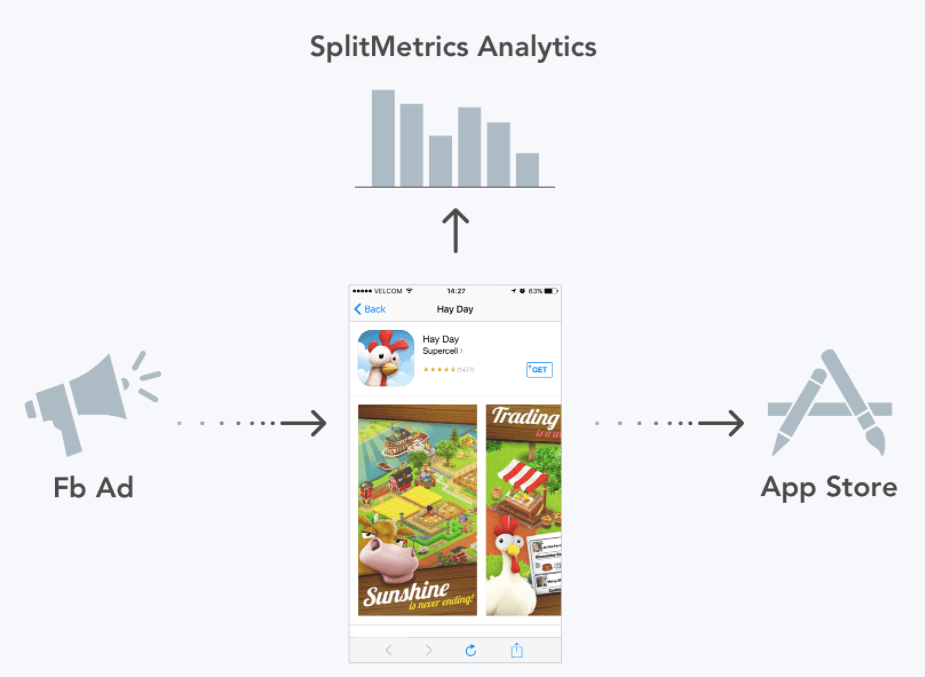

You can proceed with the A/B test itself once the first 3 steps are completed. Before launching an experiment, it’s vital to choose a traffic source and define a target audience. Remember that users must be split equally between variations.

Running the test itself is effortless if you use SplitMetrics, a tool that automatically distributes audience members, landing them on two different variations.

All you have to do is fill your experiment with quality visitors, and the platform will do everything else for you.

It’s recommended to run split-tests for at least 7 days to capture user behavior on every day of the week.
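How long is long enough also depends on traffic. The minimal sketch below assumes a two-sided test at a 5% significance level with 80% power (conventional defaults, not values from this article) and uses the standard normal-approximation sample size for comparing two proportions. It estimates the visitors needed per variant and converts that into days, never fewer than 7 and rounded up to whole weeks so every weekday is covered equally. The function names and example rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_base, p_target, alpha=0.05, power=0.8):
    """Visitors per variant to detect a change from p_base to p_target.

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_target - p_base) ** 2)


def estimate_duration_days(p_base, p_target, daily_visitors, n_variants=2, min_days=7):
    """Days needed to collect the sample, at least min_days, rounded up to whole weeks."""
    needed = sample_size_per_variant(p_base, p_target) * n_variants
    days = max(min_days, math.ceil(needed / daily_visitors))
    return math.ceil(days / 7) * 7


# Baseline 30% conversion, hoping to detect 33%, with 1,000 visitors per day.
print(estimate_duration_days(0.30, 0.33, daily_visitors=1000))  # → 14
```

Smaller expected lifts need disproportionately more visitors, since the required sample grows with the inverse square of the difference you want to detect.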

Duration: a few hours

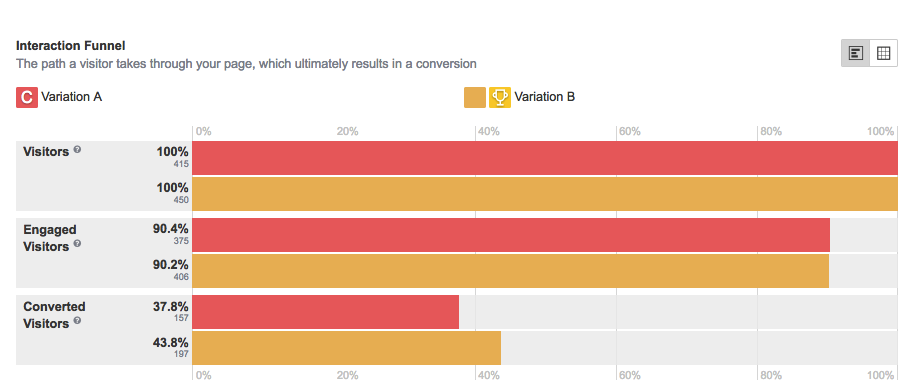

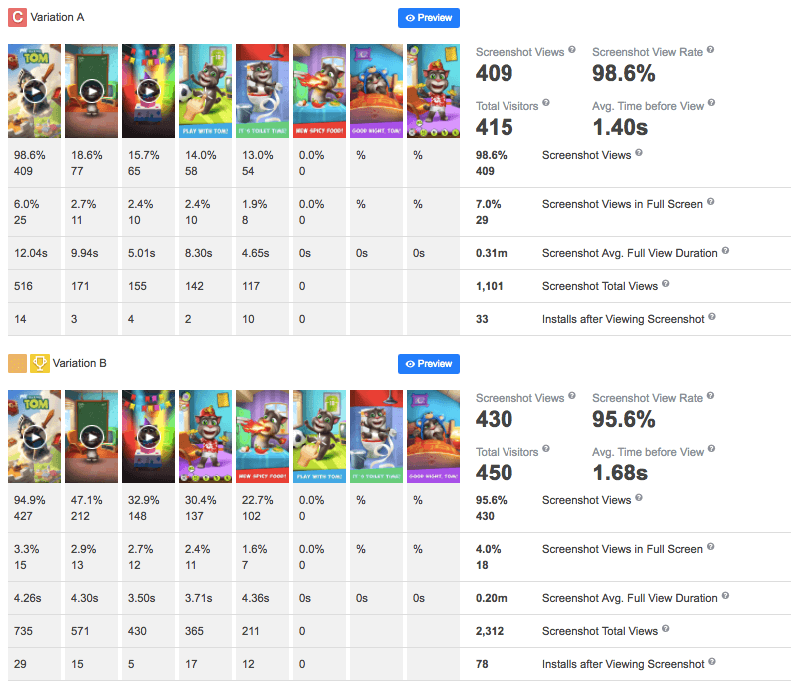

Once a statistically significant number of active users have visited the experiment, you can finish your test and start analyzing its results. If you run your test with SplitMetrics, the system identifies the winner automatically when the experiment reaches 85% confidence.
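For a sense of what a confidence figure like this can mean, here is a minimal sketch of a pooled two-proportion z-test that returns the one-sided confidence that variant B converts better than variant A. It is a textbook method, not necessarily the calculation SplitMetrics uses, and the install and visitor counts in the example are made up.

```python
import math
from statistics import NormalDist


def confidence_b_beats_a(installs_a, visitors_a, installs_b, visitors_b):
    """One-sided confidence that B's conversion rate exceeds A's (pooled two-proportion z-test)."""
    p_a = installs_a / visitors_a
    p_b = installs_b / visitors_b
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:
        return 0.5  # identical 0% or 100% conversion everywhere: no evidence either way
    z = (p_b - p_a) / se
    return NormalDist().cdf(z)


# Made-up results: 300 installs from 1,000 visitors for A vs 345 from 1,000 for B.
confidence = confidence_b_beats_a(300, 1000, 345, 1000)
print(f"confidence that B beats A: {confidence:.1%}")
is_winner = confidence >= 0.85  # the threshold mentioned in the text
```

Checking this number repeatedly and stopping the moment it crosses a threshold inflates the false-positive rate, which is one reason to decide the test duration in advance.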

Once the test reaches a trustworthy confidence level, your original hypothesis will be either confirmed or refuted. It’s worth mentioning that a disproved hypothesis doesn’t mean your A/B test ended in a fiasco. Quite the opposite.

Negative results keep us from making changes that could cost thousands of lost installs.

Analyzing conversion changes is a must, no denying that. Yet don’t forget to set aside time to explore other metrics that will help you understand your users better.

Duration: 1-2 months

Provided you find a clear winner, you can implement the results in the App Store straight away. Naturally, you should then track conversion changes after uploading the optimized product page elements.

If you stay consistent with your A/B testing activity, the results will speak for themselves. Nevertheless, your conversion won’t change overnight: it normally takes 1-2 months for a new trend to gain ground.
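Tracking the post-release change can be as simple as comparing aggregate conversion (installs divided by product page views) in equal windows before and after the release date. The sketch below assumes daily counts exported from your analytics as a `date -> (page_views, installs)` mapping; the 28-day window and the sample numbers are hypothetical. A before/after comparison is not a controlled experiment, so seasonality, featuring, or paid campaigns can also move the numbers.

```python
import math
from datetime import date, timedelta


def conversion_before_after(daily, release, window_days=28):
    """Return (before, after) conversion: total installs / total page views in each window.

    `daily` maps a date to a (page_views, installs) tuple; the release day counts as "after".
    """
    def window_rate(start, end):
        views = sum(v for day, (v, _) in daily.items() if start <= day < end)
        installs = sum(i for day, (_, i) in daily.items() if start <= day < end)
        return installs / views if views else math.nan

    window = timedelta(days=window_days)
    return window_rate(release - window, release), window_rate(release, release + window)


# Made-up data: 1,000 daily page views; installs rise from 300 to 320 after the release.
release = date(2024, 6, 1)
daily = {}
for offset in range(-28, 28):
    daily[release + timedelta(days=offset)] = (1000, 300 if offset < 0 else 320)

before, after = conversion_before_after(daily, release)
print(f"before {before:.1%}, after {after:.1%}")
```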

Duration: 3-4 months

It’s crucial to make A/B testing an integral part of your ASO strategy, because app stores are ever-changing systems subject to constant alterations.

It’s critically important to run follow-up experiments to scale results.

If you want to reap the maximum benefits from A/B testing your product page, mind the following golden rules:

When it comes to app conversion, there is always room for improvement, and a well-structured approach to A/B testing can help you become an App Store high-flyer. However, as in all things, success requires time, dedication, and persistence.
