Beginner’s Guide to A/B Testing for ASO on the App Store & Google Play

Gabriel Kuriata


A/B testing for App Store Optimization (ASO) is the process of comparing two or more variations of an app’s store listing or product page to determine which version performs better. The end goal is a higher conversion rate (CR), which leads to more app downloads and, consequently, better profitability for any user acquisition channel.

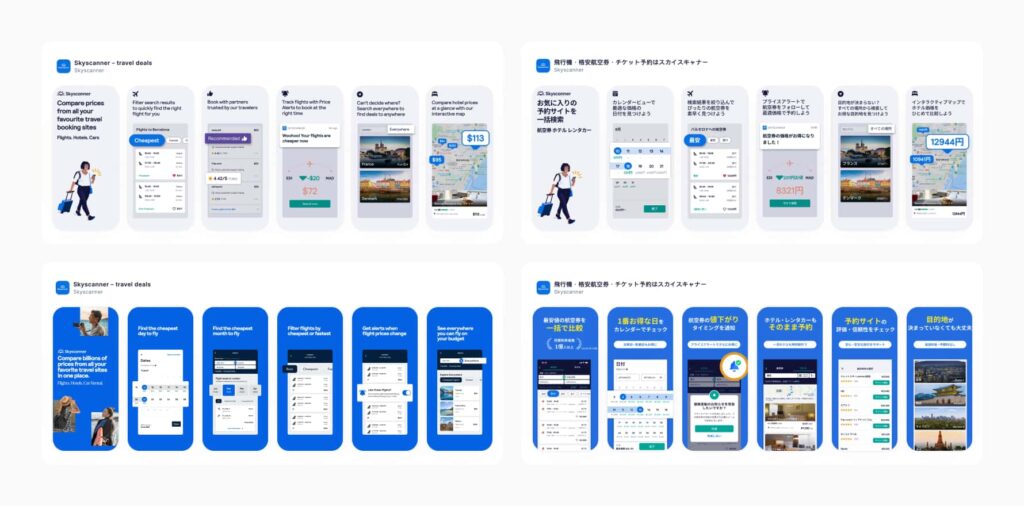

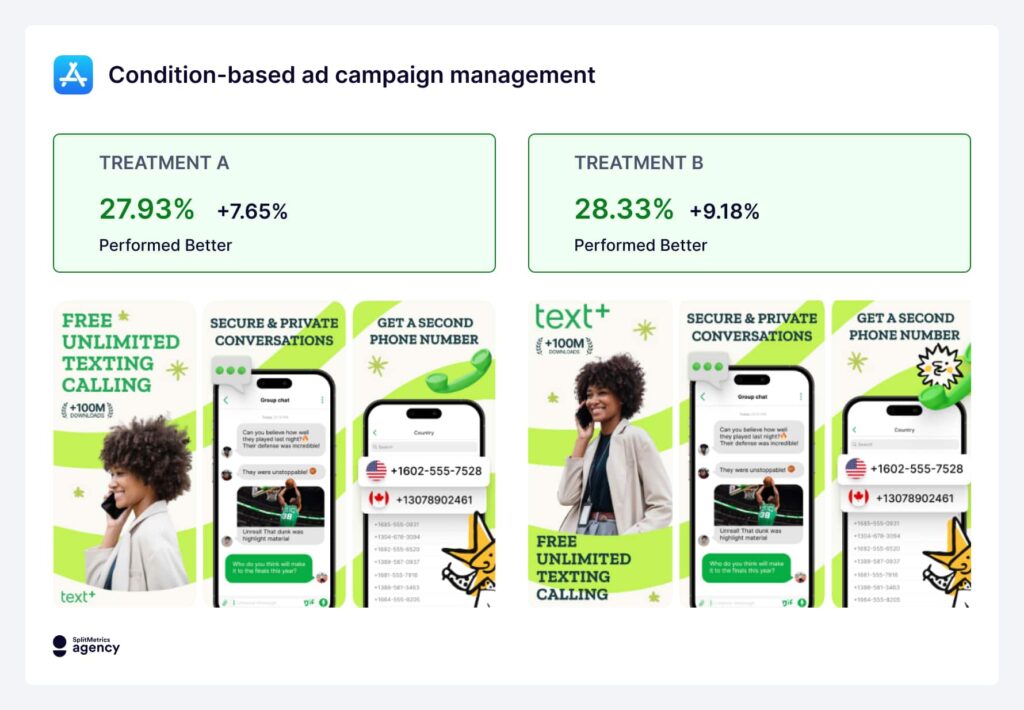

More than 119K installs from a single screenshot change? The case of SplitMetrics Agency’s ASO and A/B testing for texPlus shows it’s possible.

A/B testing enables data-driven decisions, eliminating costly and time-consuming guesswork. Both leading app marketplaces, the App Store and Google Play, offer their native A/B testing functionalities. Advanced solutions like SplitMetrics Optimize significantly expand them, offering more metrics and options, like pre-launch testing.

Enough A/B testing methods are available that every app team should treat testing as an essential part of its ASO strategy and aim to replicate the agency’s success with texPlus. Here’s how to do it.

A/B testing for app store optimization (ASO) on Google Play and the App Store is focused on optimizing a product page or a store listing’s impact on the conversion rate. It’s relevant for both organic and paid user acquisition.

In general, A/B testing is a critical component of understanding your audience in a target market, having a significant impact on app localization and seasonal updates.

A/B testing will increase your CR and ROI because it will allow you to ground your design in real, current data, resonating with your audience best.

The impact of A/B testing for ASO is especially powerful for Apple Ads or Google’s App Campaigns. For example, careful optimization of creative components of a product page can lead to a synergistic effect between ASO and Apple Ads, as search results ads utilize product page creatives directly, and overall product page performance is a factor in determining their relevance.

To summarize, A/B testing for ASO is crucial, as it can impact all user acquisition channels. Without further ado, let’s examine how these tests can be run.

There are two methods of running A/B tests for ASO:

On the App Store, it’s Product Page Optimization (PPO), a feature of App Store Connect. On Google Play, Store Listing Experiments are available in the Google Play Console.

In general, an ASO specialist or an advertiser can A/B test any component of a product page or a store listing. Native features have some limitations, though. For example, the app’s name can only be changed with a significant update to the app. Here’s a brief comparison of these native features with SplitMetrics Optimize, our A/B testing platform:

| Element to A/B Test | App Store (PPO) | Google Play (CSL) | SplitMetrics Optimize |
| --- | --- | --- | --- |
| App icon | Yes | Yes | Yes |
| Screenshots | Yes | Yes | Yes |
| App previews (videos) | Yes | Yes | Yes |
| App name | No | No | Yes |
| Subtitle | No | Not applicable | Yes |
| Short description | Not applicable | Yes | Yes |
| Full description | No | Yes | Yes |
| Feature graphic | Not applicable | Yes | Yes |
| Localization | Yes | Yes | Yes |
| Number of variations | Up to 3 | Up to 3 | 8 |
| Audience | Random segment of organic users | Random segment of all users visiting the listing | Any paid UA channel |
| Review process | Yes | Yes | None |
| Test duration | Up to 90 days | Minimum 7 days | Methodology-dependent |

There are two things to remember:

There are more caveats to using these free native services, primarily in what they don’t do. Let’s compare them with our platform in more detail.

Let’s consider the following:

| Feature | SplitMetrics Optimize | Native feature |
| --- | --- | --- |
| Pre-Launch Testing | Yes | No |
| Testing methodologies | Bayesian, Sequential, Multi-Armed Bandit | Bayesian |
| Approval Process | No | Yes |
| Targeting & Traffic Control | ICP Targeting, Traffic Routing, Custom Requests | No |
| Result Accuracy | Level of confidence under control | High level of inaccuracies |
| Testing Environments | Product Page/Store listing + Search & Category (competitive environment) | Product page or store listing |
| User Behavior Analytics | 50+ Detailed Metrics | Limited (3 metrics) |
| Simultaneous Experiments | Unlimited | Limited |

Running A/B tests with an external service provides more flexibility in choosing traffic sources, allows for testing a product page before an app is even released, and ensures that the results are statistically significant. Detailed performance metrics will enable testers to draw meaningful conclusions about user experience and usability, even from tests that negate a hypothesis or end without a decisive winner.

Custom product pages (App Store) and custom store listings (Google Play) are alternative versions of your app’s main product page, created to target specific user segments.

These are used primarily to align the content a user sees on the app store with the content they saw in an ad or a specific marketing channel, thereby creating a more personalized experience and increasing the conversion rate from an ad click to an app install.

Their versatility and flexibility mean that app marketers effectively utilize them to try different designs or run A/B-style tests (an actual A/B test requires splitting a single, randomized audience to show them different variants, with all other conditions being equal). There is one thing to consider, though.

An advanced A/B testing platform running tests under scientific rigor will offer the best, statistically sound results with high confidence. Different testing methodologies help make even elaborate experiments relatively short.

The key argument with experiments done with CPPs or CSLs is convenience. You simply introduce new designs for particular keywords or advertising channels and try them out one after another.

You can A/B test your app even when it’s nothing more than a few sketches by your designer or visualisations prepared with the help of generative AI, provided you have a solution that supports it. The stage at which you choose to A/B test your app has a significant impact on the methodology.

Pre-launch A/B testing is possible with external, specialized tools like SplitMetrics Optimize, significantly contributing to an app’s development or even conceptual phases. It can be used to determine which creative direction to take.

The capability extends to A/B tests shortly before a release, during a beta phase, where the app’s App Store or Google Play presence is fine-tuned to maximize the impact of a launch.

The most critical times to consider A/B testing for your app’s store listing are before major holiday seasons and during periods of significant app updates.

However, A/B testing can and should be a continuous process integrated into your strategy, as you will likely evolve your pages/listings in iterations.

Both Product Page Optimization (PPO) in App Store Connect and Store Listing Experiments in Google Play Console utilize a fixed methodology to run A/B tests, which is warranted by their focus.

For comparison, SplitMetrics Optimize employs multiple A/B testing methodologies to analyze data and determine a winner. Each has its own strengths and is suited for different types of tests (for example, the multi-armed bandit is optimal for seasonal experiments). Here’s a comparison of A/B testing methodologies available on the platform:

| | Bayesian | Sequential | Multi-armed bandit |
| --- | --- | --- | --- |
| Best for | Live apps, periodic & minor changes | Pre-launch, big changes & ideas | Seasonal experiments |
| Traffic allocation | Randomly & equally | Randomly & equally | More to the best variation |
| Interpretability | Improvement level, winning probability, chance to beat control | Improvement level, confidence level | Weight |
| Key benefit | The golden middle methodology | High confidence | Speed & low cost |

Choosing the proper methodology for a goal will help optimize the costs of acquiring traffic for tests, as well as increase the confidence level of your results. Their inclusion reflects the wider range of possibilities SplitMetrics offers.
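To make the Bayesian outputs named in the table more concrete, here is a minimal sketch of how an "improvement level" and a "chance to beat control" could be estimated from raw counts. It assumes a uniform Beta(1, 1) prior and Monte Carlo sampling; the function names and the install numbers are hypothetical, and this is not a description of how SplitMetrics Optimize or the native store tools compute their figures.

```python
import random


def chance_to_beat_control(control, variant, draws=20000, seed=0):
    """Estimate P(variant CR > control CR) by Monte Carlo sampling.

    Each argument is an (installs, visitors) tuple. A uniform Beta(1, 1)
    prior is assumed, so the posterior for each conversion rate is
    Beta(1 + installs, 1 + visitors - installs).
    """
    rng = random.Random(seed)
    c_installs, c_visitors = control
    v_installs, v_visitors = variant
    wins = 0
    for _ in range(draws):
        c_rate = rng.betavariate(1 + c_installs, 1 + c_visitors - c_installs)
        v_rate = rng.betavariate(1 + v_installs, 1 + v_visitors - v_installs)
        if v_rate > c_rate:
            wins += 1
    return wins / draws


def relative_improvement(control, variant):
    """Observed relative uplift in conversion rate of variant over control."""
    c_rate = control[0] / control[1]
    v_rate = variant[0] / variant[1]
    return (v_rate - c_rate) / c_rate


if __name__ == "__main__":
    control = (300, 1000)  # hypothetical: 300 installs from 1,000 visitors
    variant = (345, 1000)  # hypothetical: 345 installs from 1,000 visitors
    print(f"improvement: {relative_improvement(control, variant):.1%}")
    print(f"chance to beat control: {chance_to_beat_control(control, variant):.1%}")
```

A high chance to beat control combined with a small improvement can still be a weak business case, which is why both numbers are usually read together.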

Not all components of a product page or a store listing are equally important for conversion rate uplift, and the fact that you can test an element doesn’t mean you should. After countless tests run by our team, we can confidently point to the following elements of pages/listings as critical, in this order:

Additionally, the feature graphic on Google Play may be another critical asset for that marketplace, while the App Store’s designs should put special emphasis on effective app screenshots.

How to identify and select elements for testing? How to get statistically significant results, especially with low traffic, for as little money as possible?

The technical side of running an A/B test is easy; designing and implementing an actual test requires a solid framework that puts creativity on the right track. Let’s take a look at the A/B testing and app validation framework that SplitMetrics follows.

SplitMetrics’ official app validation and A/B testing framework:

1. Research your competitors, market, and audience;

2. Ideate all the elements you could test;

3. Form a hypothesis;

4. Select variations;

5. Design an experiment;

6. Analyze the results;

7. Share your findings;

8. Go back to stage 3!

First, it begins with research, with ASO competitive research being a critical part. Creative optimization with A/B testing for ASO never takes place in a vacuum. A product page should be immediately understood within its category, yet be distinct enough to capture attention and incentivize a download.

Second, following this order will guide your designers to make changes with potential impact on conversion rates, grounded in data and a good understanding of user preferences and journeys.

What you want to test largely depends on when you’re running your experiments. For example, while prototyping, you may compare significantly different designs, even multiple ones, as a form of market research. Rockbite’s mobile game validation is an excellent example of this practice.

A/B testing for a live app is an iterative process, where the elements with the most significant impact are selected first. Logically, these may be the focal points of each component:

A reasonable hypothesis connects a specific element to a change in conversion rate. It largely depends on what particular product page creative you’re testing, so let’s review them and provide some examples.

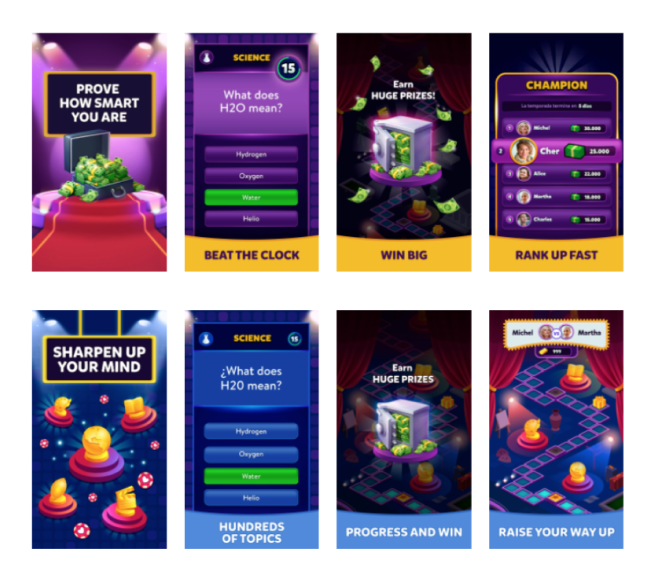

Screenshots, with design grounded in data and user preferences, are critical for conversion rates. Visible in both search results and on the product page, the right choice of screenshots can have a tremendous impact on your results. Here are some examples of what you can test:

Visual hierarchy played a significant role in the success of the new variation for texPlus. The logo is bigger and easier to spot. For apps with an established history or strong brand recognition, it’s usually more effective to give the branding more prominence in the first screenshots. Additionally, the benefit message has better contrast: the value proposition (“Free unlimited texting & calling”) is easier to read in a split second. The new pose of the person guides attention more effectively across the logo and benefit text, improving overall screenshot readability. All those changes add up because users spend only a couple of seconds deciding whether to scroll past or download.

Screenshot optimization is critical on the App Store, where the first screenshot occupies a significant part of the screen. Additionally, device uniformity has encouraged some unique design patterns (like panoramic screenshots) that might not work as well on Google Play. It’s one of many reasons to treat these marketplaces individually in terms of screenshot optimization.

A sound app icon communicates the purpose of your app immediately, while standing out from the crowd. It’s essential to track and optimize tap-through and conversion rates. Here are some directions your A/B tests can go:

A/B testing a preview video is about optimizing the first dynamic impression a user has of your app. These videos are crucial because they autoplay silently, receive priority placement over screenshots, and can quickly convey your app’s core value. Here are the key elements to an A/B test for your app preview video:

Additionally, consider testing whether to use a preview video at all, because not all apps need them. For highly focused apps, a screenshot might well do the trick.

Choosing the right paid user acquisition (UA) channels for A/B testing matters when there is insufficient organic traffic to meet the minimum sample size in a reasonable time, or when there can be no organic traffic at all, as with pre-launch tests.

Not all advertising platforms will be equally effective for all apps. The channels selected should align with your app’s target audience. For example, if your app is a short-form video editing tool, your target audience is likely active on TikTok and Instagram, making these platforms high-priority for testing.

Ad channel validation is also a significant direction of A/B testing. You can sample audiences from a multitude of channels before ultimately committing your resources to the ones that are most profitable and best suited to your particular type of app.

You should run an A/B test long enough to reach a specific level of confidence in the results. There is quite a lot of science behind how long that takes and what the minimum sample size is for effective and accurate tests. SplitMetrics Optimize operates on these principles and automates the process, leaving you with only the task of driving traffic by whatever means you need.

In the case of our platform, the time required to reach a specific confidence level depends on the A/B testing methodology, which in turn should be tailored to the thing being tested. The design of the test itself can also have an impact. Significant differences between variations may contribute to the result emerging more quickly and decisively.
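As a rough illustration of why bigger differences between variations resolve faster, the sketch below uses the classical fixed-horizon sample size formula for a two-sided two-proportion z-test. This is a textbook approximation, not the calculation SplitMetrics Optimize or the native store tools perform; the function names, the 5% significance level, the 80% power, and the traffic figures are all assumptions chosen for the example.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_cr, relative_uplift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect a relative uplift.

    Classical formula for a two-sided two-proportion z-test.
    baseline_cr is the control conversion rate (e.g. 0.30);
    relative_uplift is the smallest uplift worth detecting (e.g. 0.10 for +10%).
    """
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_uplift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_days(per_variation, variations, daily_visitors):
    """Days needed if daily traffic is split equally across all variations."""
    return math.ceil(per_variation * variations / daily_visitors)


if __name__ == "__main__":
    # Hypothetical page: 30% baseline CR, 1,000 visitors per day, 2 variations.
    for uplift in (0.05, 0.10, 0.20):
        n = visitors_per_variation(0.30, uplift)
        days = estimated_days(n, variations=2, daily_visitors=1000)
        print(f"uplift {uplift:.0%}: {n} visitors per variation, about {days} days")
```

Halving the uplift you want to detect roughly quadruples the required traffic, which is why tests of subtle changes on low-traffic pages tend to run long.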

The rule is that high traffic will make your tests shorter. In general, quick, multi-armed bandit tests before holidays can be resolved in a week. Complex, sequential tests can run for a month. Custom product pages can be swapped in one-month cycles.
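The speed of multi-armed bandit tests comes from routing more traffic to the variation that currently looks best. The simulation below sketches that idea with Thompson sampling, one common bandit strategy; the article does not say which algorithm SplitMetrics Optimize uses, so treat this as an illustration only. The function name and the conversion rates are hypothetical.

```python
import random


def simulate_thompson_sampling(true_rates, visitors=5000, seed=0):
    """Simulate a Bernoulli multi-armed bandit with Thompson sampling.

    true_rates are hypothetical conversion rates, unknown to the algorithm.
    Returns the number of visitors routed to each variation.
    """
    rng = random.Random(seed)
    installs = [0] * len(true_rates)
    shown = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible CR for each variation from its Beta posterior
        # (uniform Beta(1, 1) prior) and show the variation with the best draw.
        samples = [
            rng.betavariate(1 + installs[i], 1 + shown[i] - installs[i])
            for i in range(len(true_rates))
        ]
        arm = samples.index(max(samples))
        shown[arm] += 1
        # Simulate whether this visitor installs the app.
        if rng.random() < true_rates[arm]:
            installs[arm] += 1
    return shown


if __name__ == "__main__":
    allocation = simulate_thompson_sampling([0.25, 0.30, 0.40])
    for index, count in enumerate(allocation):
        print(f"variation {index}: {count} visitors")
```

Because weaker variations receive less and less traffic, fewer paid visitors are spent on them, which matches the "speed & low cost" benefit attributed to this methodology.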

How to interpret the results of an A/B test? Let’s examine three possible outcomes:

Regardless of the test result, analyze every performance metric you can for each variation to learn as much as possible about your audience. Screenshot scroll depth, product page scroll depth, and taps can all push you toward a new hypothesis. This is why SplitMetrics Optimize’s additional metrics make each test a success: they leave you with important insights about your audience.

A/B testing may seem complicated, but it’s not, provided you organize your work within the proper framework. Mastered, it can unlock spectacular results, critical to the effective and profitable scaling of your app. To wrap up, here are 10 tips for A/B testing for beginners, capturing the message of the article:

A/B testing is vital for app growth, transforming guesswork into data-driven success. By embracing continuous optimization and leveraging powerful tools like SplitMetrics Optimize, you can unlock your app’s full potential. Start experimenting today to maximize conversions and achieve profitable scaling!