Category and Search A/B Tests: How to Improve App Store Search Ranking

Liza Knotko

Ever wondered how category and search ranking affect your app’s conversion rate? Given that about 65% of downloads result from a search in the App Store, the most successful mobile marketers never neglect optimizing their apps for a competitive environment.

If you aim to improve App Store search ranking, a consistent mobile A/B testing strategy is a must. Search and category split tests should become an integral part of such a strategy, and today we’ll discuss how to run these A/B experiments to get the best possible results.

The majority of ASO hackers in big companies use App Store A/B testing for their product pages on a regular basis. Indeed, product page experiments should be your top priority if you rely on paid traffic that skips search in the App Store.

However, if your goal is to distill the secret to harnessing organic traffic, focus on search and category tests.

You can also try to test search in the App Store with the help of Creative Sets, but mind that this method has quite a few limitations.

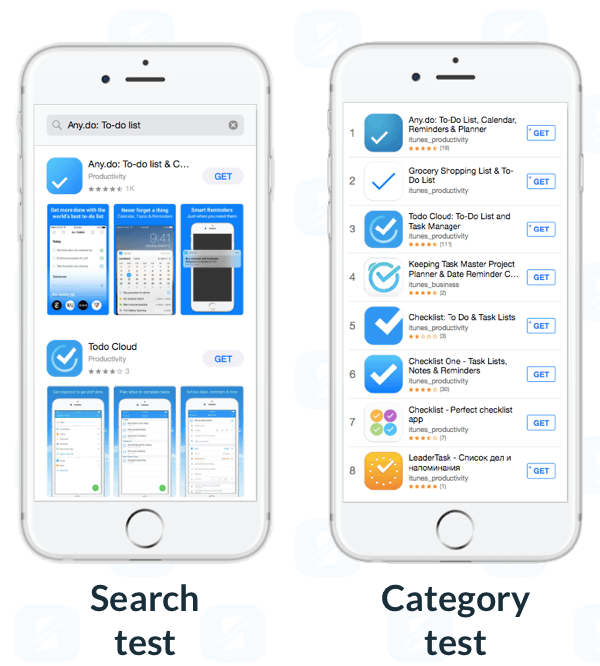

Search and category tests empower marketers to optimize conversion beyond their product pages, providing context for collecting data on the whole journey of app store visitors. We all know that before getting to your product page, users first choose from dozens of similar apps and your task here is to make your app stand out.

Search and category experiments are subject to all classic rules for valid mobile A/B testing:

When it comes to A/B testing workflow, search and category experiments are not that different. They require classic App Store split testing steps:

Nevertheless, A/B testing of App Store search has certain peculiarities at the preparation and results interpretation stages.

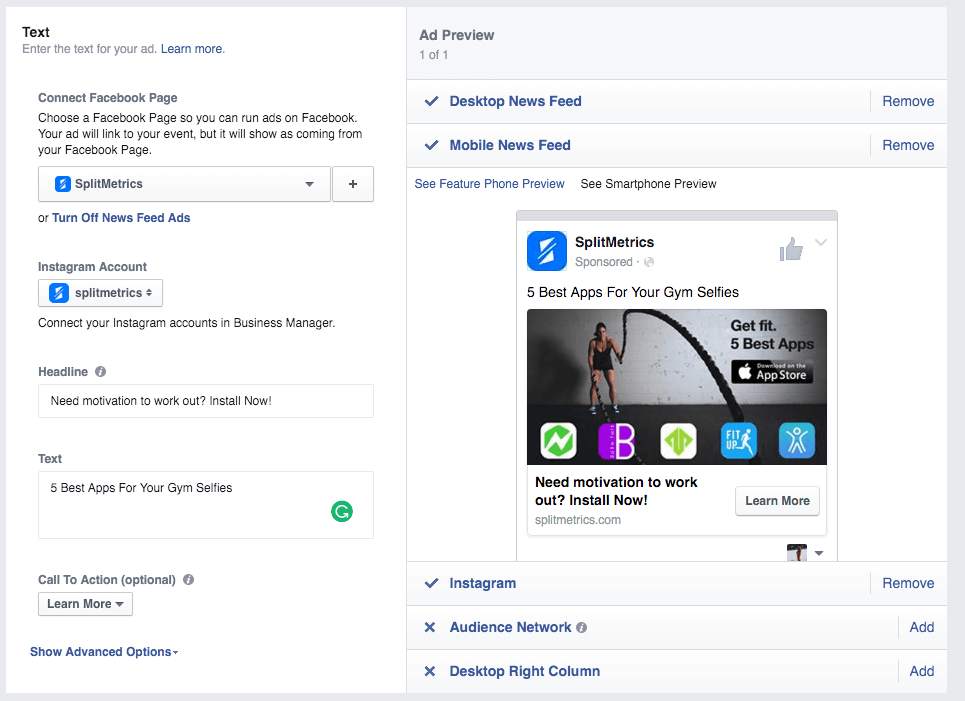

First and foremost, you should pay extra attention to the ad banner you’re going to use. It can’t be a blatant ad for your app. After all, users will find themselves on a page emulating App Store search after clicking it. So make it a bit more general.

Imagine you want to test your fitness app against its competitors. In that case, create a banner which promotes not only your app but a few apps from your category. Opt for more generic captions, for example, ‘Get Fit. 5 Best Apps’.

That way, the search page a user is redirected to will be relevant to the banner they clicked.

Another important aspect of the preparation stage is the choice of competitors. You need:

If you decide to run a Search experiment using a certain keyword, it makes sense to research the App Store search results and duplicate all competitors and their order. It’ll make your test as true to life as possible.

However, there are cases when mobile marketers intentionally run A/B tests surrounding their app only with weak or only with strong competitors to perform market research and evaluate the position of their app.

Big brands usually don’t change the app order in search and category tests, to achieve maximum relevancy. At the same time, smaller publishers prefer to launch experiments against industry leaders to find out what can help their app stand out.

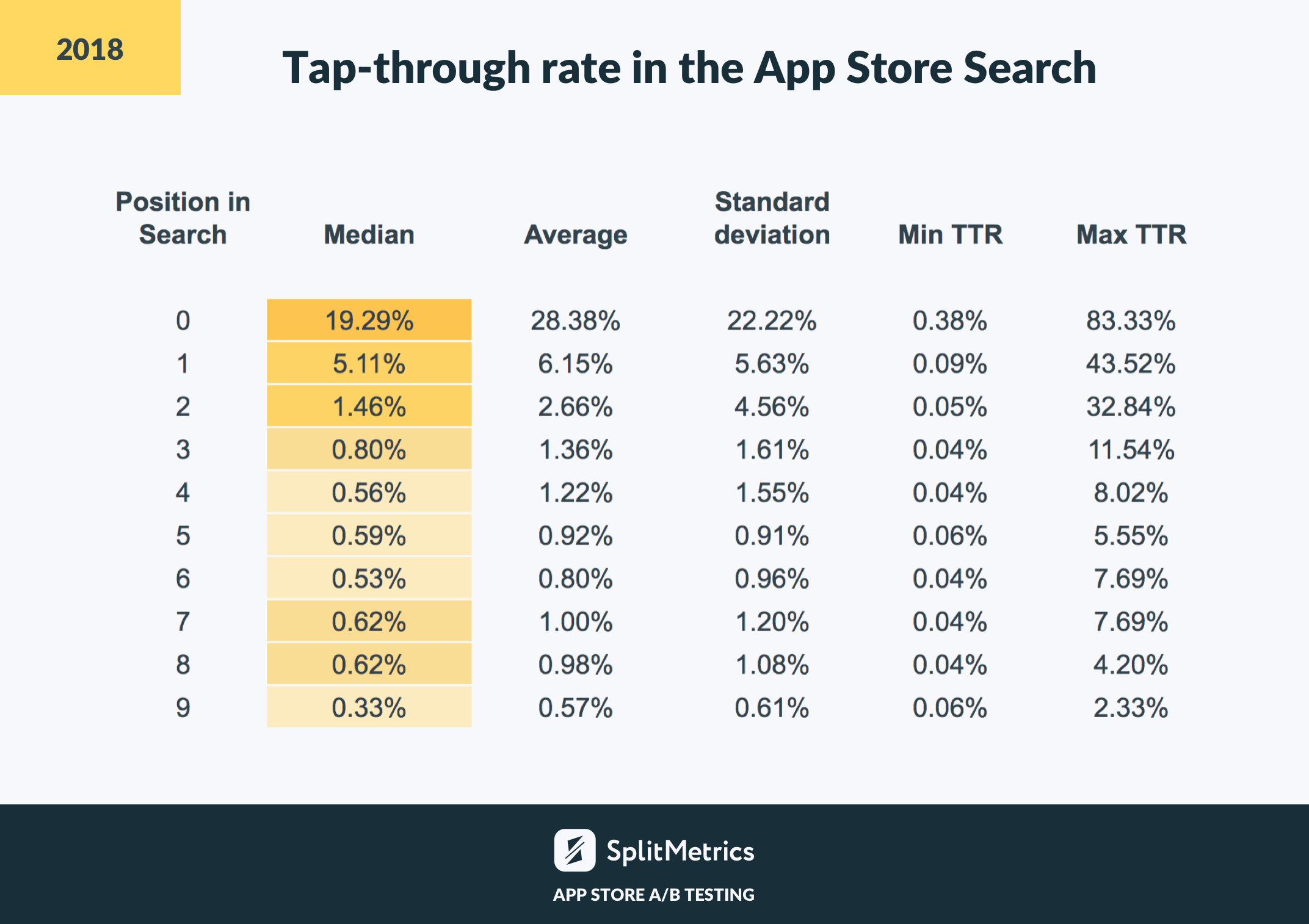

Mind that a lower position in App Store search doesn’t automatically mean lower conversion. Sure, the first position gets the best tap-through rate, or TTR (28.38% on average). Yet, our latest research showed that the 8th and 9th positions in App Store search can boast a better average TTR than the 6th and 7th (1% and 0.98% against 0.92% and 0.80%).

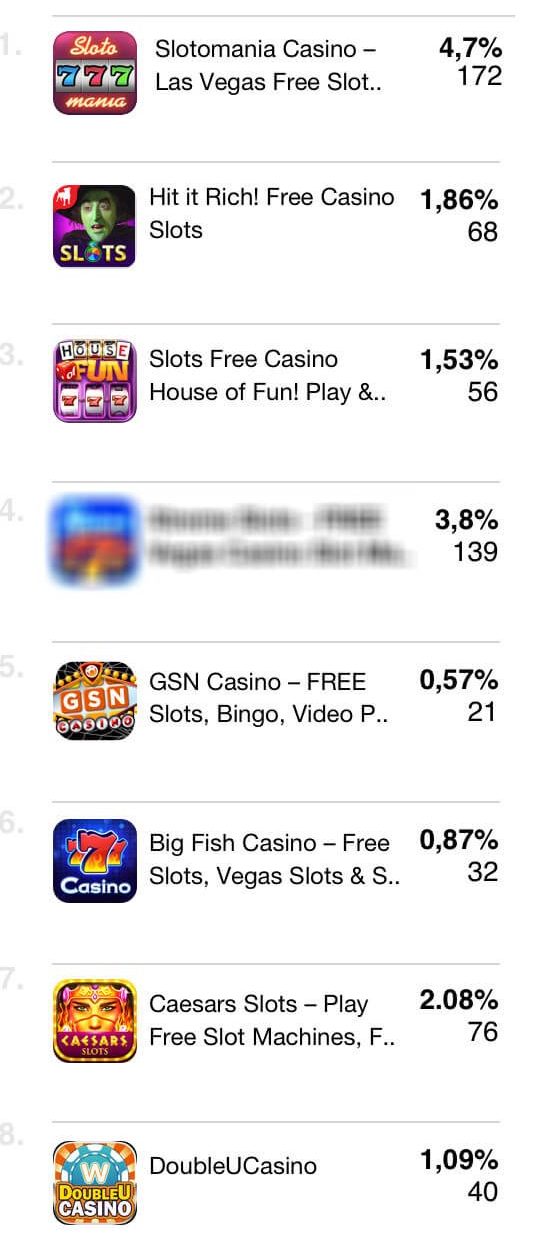

Thus, as you go further down the page, the results are not as obvious. For instance, one of our clients ran a test for their Slots app against competitors in the same category. Surprisingly, the 4th position performed better than the 2nd and 3rd in the course of that category experiment, proving once again that a higher position doesn’t equal better conversion.

When you decide to run a search or category test, keep in mind that such experiments require more traffic than classic product page split tests. The thing is, your app competes for taps with every other listing on the page, so you need more users to collect enough clicks and reach a decent confidence level.
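To get a feel for why lower tap-through rates demand more traffic, here is a rough sketch based on the standard sample-size approximation for comparing two proportions. The function name, the 5% significance level, the 80% power and the example rates are all illustrative assumptions, not SplitMetrics settings or benchmarks.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variation(base_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect the difference
    between two conversion rates with a two-sided test.

    Hypothetical helper: alpha and power defaults are common conventions,
    not values taken from the article.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = base_rate * (1 - base_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - base_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Made-up rates: the same 10% relative lift on a product page (30% -> 33%)
# and on a search listing (1% -> 1.1%).
product_page = visitors_per_variation(0.30, 0.33)
search_listing = visitors_per_variation(0.01, 0.011)
print(product_page, search_listing)
```

With these assumed rates, the search listing needs far more visitors per variation than the product page to detect the same relative lift, which is the effect described above.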

Other peculiarities concern the interpretation stage. Launching your search and category tests with SplitMetrics, you not only get trustworthy results with minimum effort but also App Store search insights for further analysis.

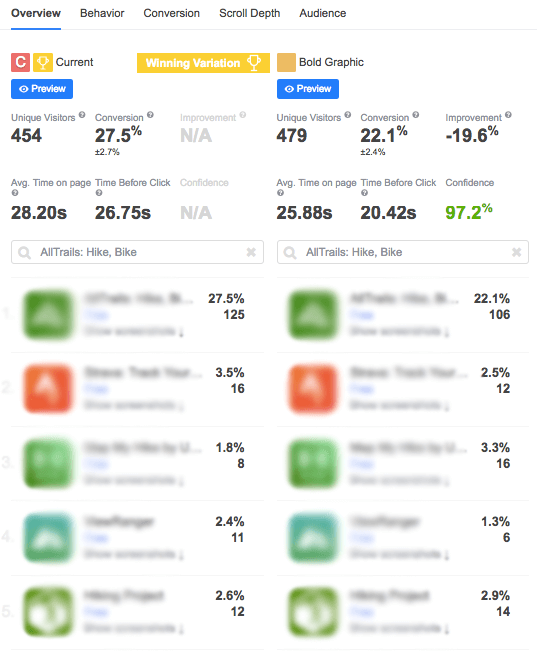

When you get down to the analysis of test results, don’t forget to pay attention to the performance difference between your variations and compare your conversion rates with those of your competitors. This can help you identify elements that predetermine the app’s success in App Store search.

For example, imagine that an A/B test showed that two of your closest competitors have a slightly better conversion rate. It’s a call to find common features of their listings (a certain word in an app name, a similar facial expression of a character, a similar caption, etc.). Then try to incorporate these findings in your next iteration.
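One way to check whether a gap between two conversion rates is more than noise is a two-proportion z-test. The sketch below is a generic statistical illustration with made-up numbers; it is not a description of how SplitMetrics calculates confidence, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference between two
    conversion rates, using the pooled-variance z-test."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Illustrative figures only: your listing vs. a competitor's listing
# shown to the same number of test visitors.
z, p_value = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be down to chance alone, so the competitor’s listing is worth studying for shared features.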

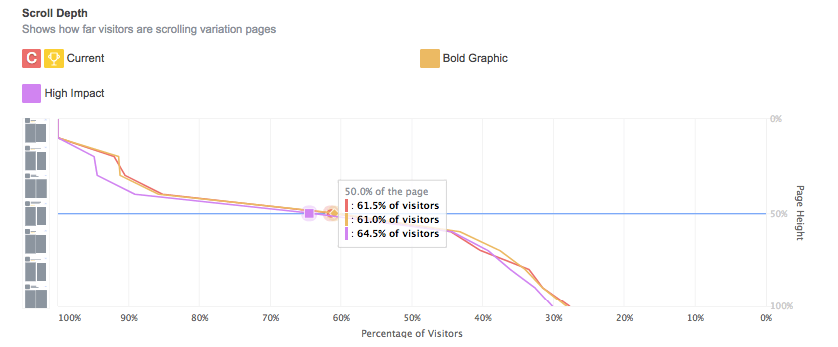

If your app doesn’t occupy the top position, it’s highly important to check Scroll Depth to find out whether users scroll search results deep enough to see your app. With a true-to-life app order in your A/B experiment, you can get a clearer picture of how your app performs in a competitive environment.

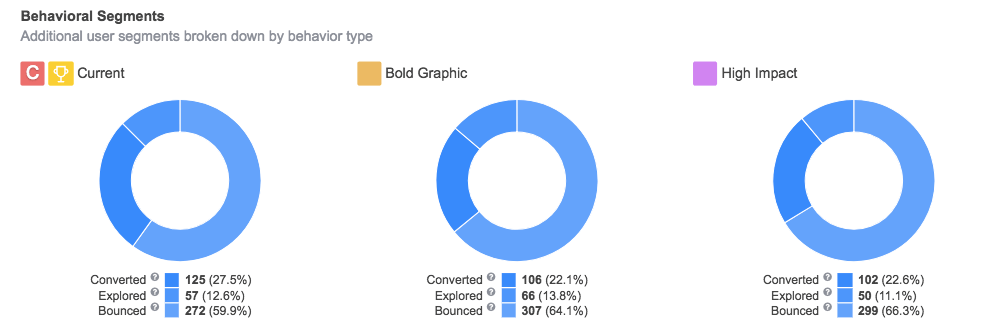

SplitMetrics gives you a chance to understand user behavior in the context of App Store search.

‘Bounce rate’ is one of the first metrics to check. In the majority of cases, it is an “acid test” of your ad banner’s relevancy. A high bounce rate is a sure sign of a discrepancy between the message of your ad banner and the content of the page under test.

After finishing the initial A/B test and getting distinct App Store search analytics, it’s recommended to launch a follow-up experiment in the context of your app’s product page. Thus, you’ll be able to see how the altered elements influence the app’s performance if a user skips the search phase.

At times, different store elements work better in different contexts. For instance, an icon with a blue background may rock App Store search results while a green background favors conversion growth on the product page. Quite a dilemma, isn’t it?

In such cases you have to sort out your priorities:

Search and category A/B tests give you a chance to kill two birds with one stone: you improve your conversion rate while collecting competitive intelligence.

Such split tests give you actionable data to see the bigger picture as you follow the visitors’ journey from the category page to the app’s landing page. Experiments with various scenarios provide valuable App Store search analytics on how to optimize your page to beat the competition.

Furthermore, if you invest in Apple Ads, App Store search optimization results in increased conversion which favors the improvement of your ads performance without extra expenses.

However, it’s nearly impossible to launch a search or category test without a specialized platform like the one SplitMetrics provides. With insightful metrics on user behavior, conversion, scroll depth, audience and more, you’ll be able to develop a winning growth strategy for your apps.